Be happy with Data Flow Integration Technology

Context:

For a long time, I wondered how to solve the friction between data engineers and operations teams, and how that friction relates to the development life cycle of data flow integrations.

To be clear, we have three areas:

Operations: They monitor the solutions, fix problems in the production environments, and keep the applications healthy.

Developers: They write the code, thinking about performance (the final product).

Data Architects: They translate the business needs into a solution architecture, which will be built by the development team and later managed and monitored by the operations team.

So, what is the context?

Many different skills are involved in this process, and not all players think the same way when they think about a solution.

This is because developers think in terms of performance and code, operators think in terms of managing and monitoring the solution, and the architect has to think about both from moment zero, when designing the solution.

So, this is the context: "We had to select a solution for our data flow needs in a large company, one that simplifies the processes between the operations and development areas and improves the development life cycle."

In this context, we selected Apache NiFi to solve our data flow needs. Why?

Apache NiFi is an open source tool for moving data between systems, whether they are on the same site or on remote sites.

It's simple to use and quick to learn, and if you need it, you can buy Hortonworks support (NiFi was originally developed at the NSA and later donated to the Apache Software Foundation; Hortonworks offers commercial support for it).

Attributes for which we selected this solution:

- Simplicity: We wanted a solution that is simple to develop with and simple for the operations teams: easy to monitor, easy to understand ("what is this integration trying to do?"), and easy to debug.

- Time to market: Big data integrations are often complex, and getting the code into production is not easy. We have many processes for transferring knowledge to the operations teams and for building the monitoring that checks the integration's health.

In this case, Apache NiFi lets us simplify this process and gives us a single place to develop our data flow integrations (not stream processing).

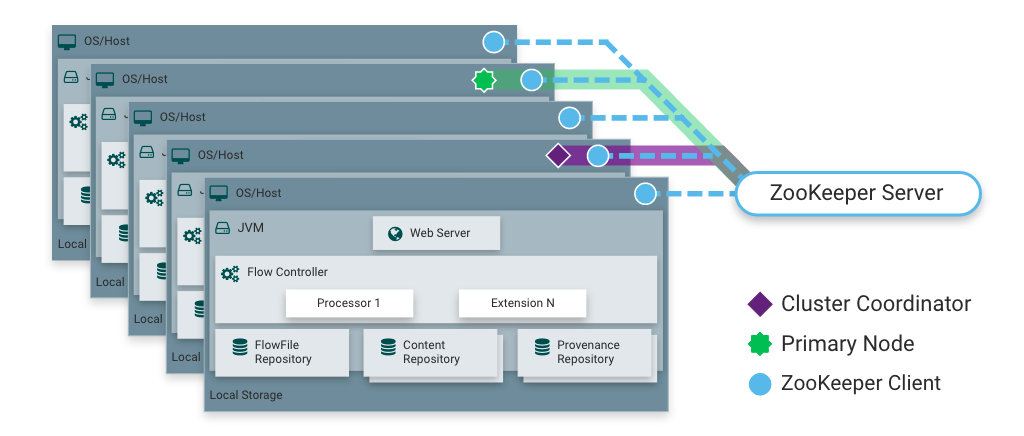

- Scalable: The horizontal scaling model and the commodity hardware philosophy give us the possibility to grow quickly if we need to.

- Visual command and control: The NiFi UI simplifies the development process and lets the operations and development areas work side by side. Operators can understand an integration more easily, and developers can deliver in less time.

- Clustering: NiFi is designed to scale out by clustering many nodes together, as described in the next image.

- Security: System to system, a dataflow is only as good as it is secure. NiFi offers secure exchange at every point in a dataflow through encrypted protocols such as 2-way SSL. You can also manage access to NiFi with LDAP / AD, which simplifies your security scheme.

- Guaranteed Delivery: The solution must be fault tolerant and guarantee data delivery, because our data is very sensitive and subject to regulatory and legal requirements. Integration is one of the points where a disaster could become a reality. NiFi keeps this work safe and guarantees data delivery between the source and target systems.

This is achieved through effective use of a purpose-built persistent write-ahead log and content repository. Together they are designed in such a way as to allow for very high transaction rates, effective load-spreading, copy-on-write, and play to the strengths of traditional disk read/writes. (NiFi documentation)
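To make the write-ahead log idea concrete, here is a toy sketch in Python. It is not how NiFi implements its repositories; the class name `TinyWriteAheadLog`, the JSON line format, and the key/value state are all assumptions made for illustration. It only shows the general principle: record each change durably on disk before applying it, so the state can be rebuilt after a restart.

```python
import json
import os


class TinyWriteAheadLog:
    """Toy write-ahead log: persist every change before applying it."""

    def __init__(self, path):
        self.path = path
        self.state = {}
        self._replay()
        self._log = open(path, "a", encoding="utf-8")

    def _replay(self):
        # Rebuild the in-memory state from the log after a restart.
        if not os.path.exists(self.path):
            return
        with open(self.path, encoding="utf-8") as log:
            for line in log:
                entry = json.loads(line)
                self.state[entry["key"]] = entry["value"]

    def put(self, key, value):
        # 1. Append the change to the log and force it to disk.
        self._log.write(json.dumps({"key": key, "value": value}) + "\n")
        self._log.flush()
        os.fsync(self._log.fileno())
        # 2. Only then apply it to the in-memory state.
        self.state[key] = value

    def close(self):
        self._log.close()
```

If the process stops after step 1, reopening the same file replays the log and recovers the last recorded value for each key. A real implementation also has to deal with partial writes, log compaction, and concurrency, which this sketch ignores.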

My use cases:

At the moment I work at the biggest ISP and telco provider in Argentina as a Data Architect (Big Data architecture included :p).

There are many use cases for a data flow solution, but I can list some examples:

DHCP logs: This is a real problem for us, because this data is extremely critical. When the police or the courts ask my company "who had this IP address at this moment (day:hour:minute)?", we have to answer precisely and quickly. So here we have our "quality attributes":

- Security: Because this information is sensitive and critical.

- Guaranteed Delivery: We can't lose data in the dataflow process. Imagine the courts asking my company about a case and us not having the correct answer.

- Scalable: Every year this data source grows in volume. We need to scale quickly, and a fault-tolerant design is required.

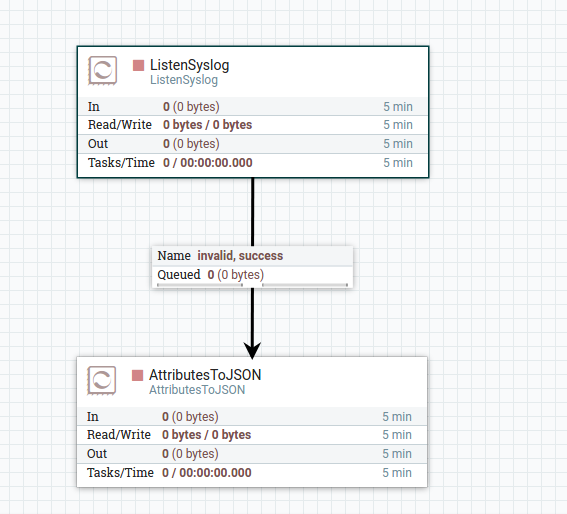

Data Center Logs: NiFi is great for this, because we need to integrate syslogs from our servers and NiFi handles this well. It has a syslog listener that parses the syslog messages.

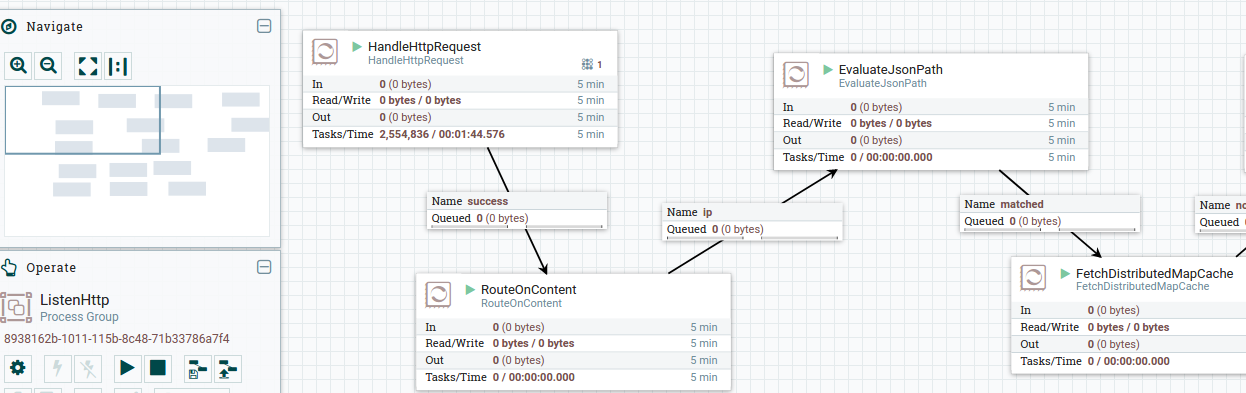

Service integrations: We often receive data from systems where we cannot pull it from the source, and the provider instead offers to push the data to us in an HTTP message (asynchronous integration). NiFi solves this and similar use cases with the "HandleHttpRequest" processor: the external system sends the data in an HTTP request and NiFi processes it.
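From the provider's side, the push is just an HTTP POST. The sketch below shows what such a client could look like in Python using only the standard library. The host, port, path, and JSON fields are made up for illustration; the real values depend on how the HandleHttpRequest processor is configured in your flow. HandleHttpRequest is normally paired with a HandleHttpResponse processor, which sends the reply the client waits for.

```python
import json
import urllib.request


def build_push_request(url, record):
    """Build the HTTP POST a provider might send to a NiFi HTTP endpoint."""
    body = json.dumps(record).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=10):
    """Send the request and return the HTTP status code of the reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

A provider would call something like `send(build_push_request("http://nifi.example.internal:9090/events", {"source": "provider-a", "id": 42}))`, where the URL is a hypothetical endpoint.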

So, this solution is very flexible and simplifies our work. In fact, NiFi can turn integrations that used to take weeks of development into a few days of work, and in some cases even hours.

An Architecture example:

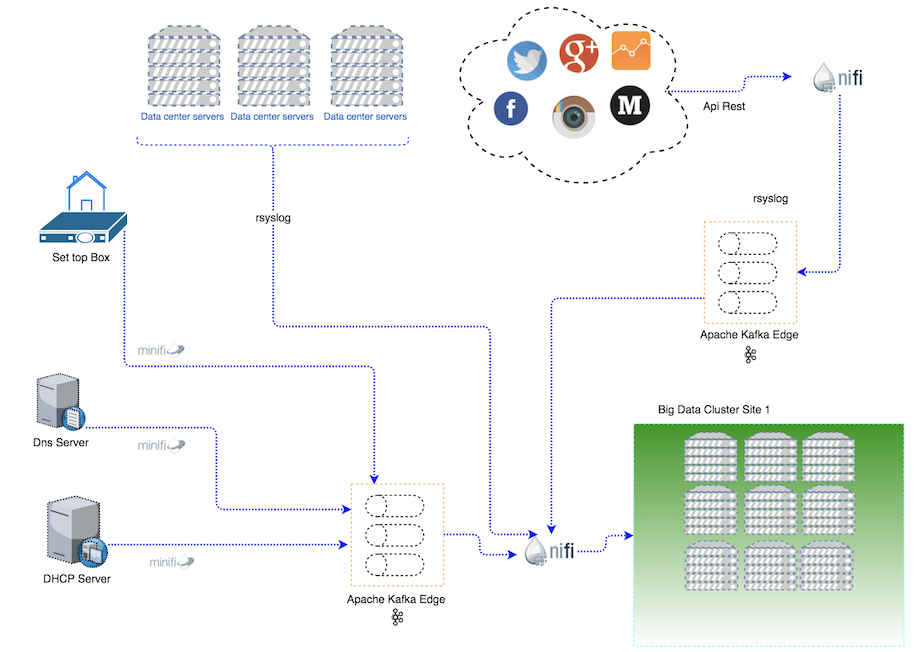

This is a very high-level description, but it is enough to understand the examples and the use cases:

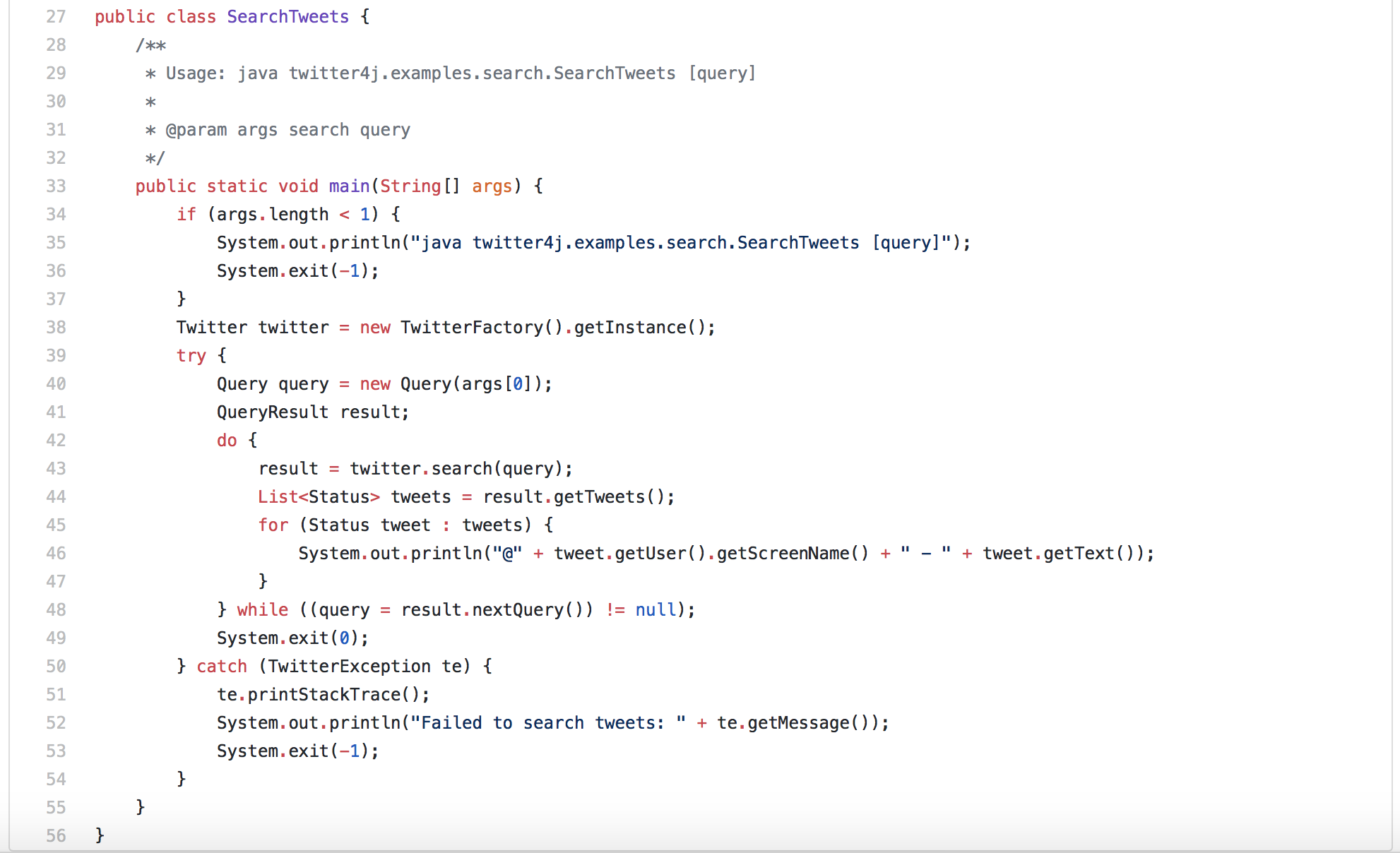

- Social network: You can use it to get data from social network APIs. Imagine you have a new business need related to Twitter. The usual way is to use Java or Python to write the integration, something like this:

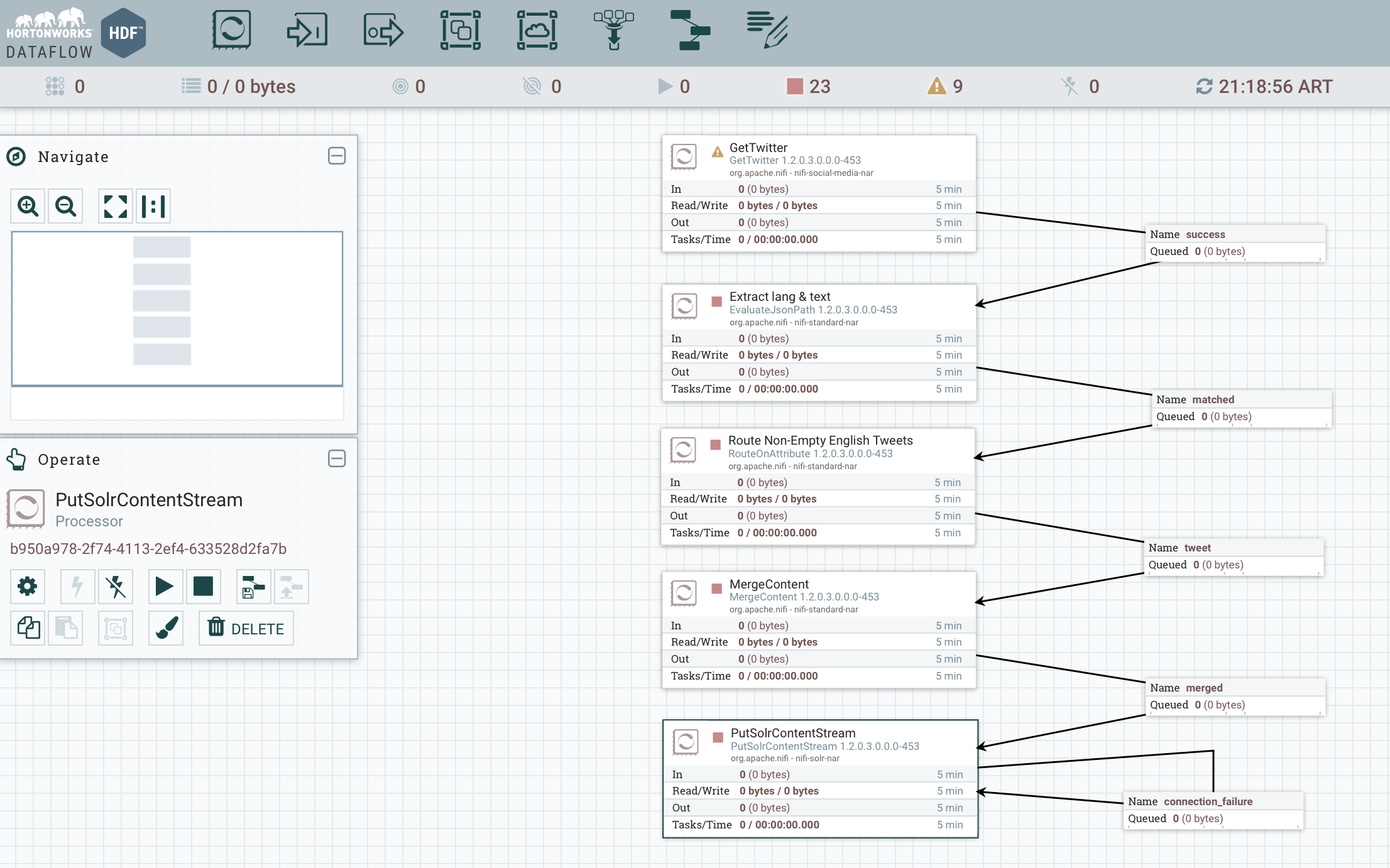

But think of a big company where you need to build something in a short time, and where the operations team has to understand, monitor, and maybe fix a problem with the application. You can use NiFi to make all these dreams a reality. Look at the next image:

This flow shows how to index tweets with Solr using NiFi.

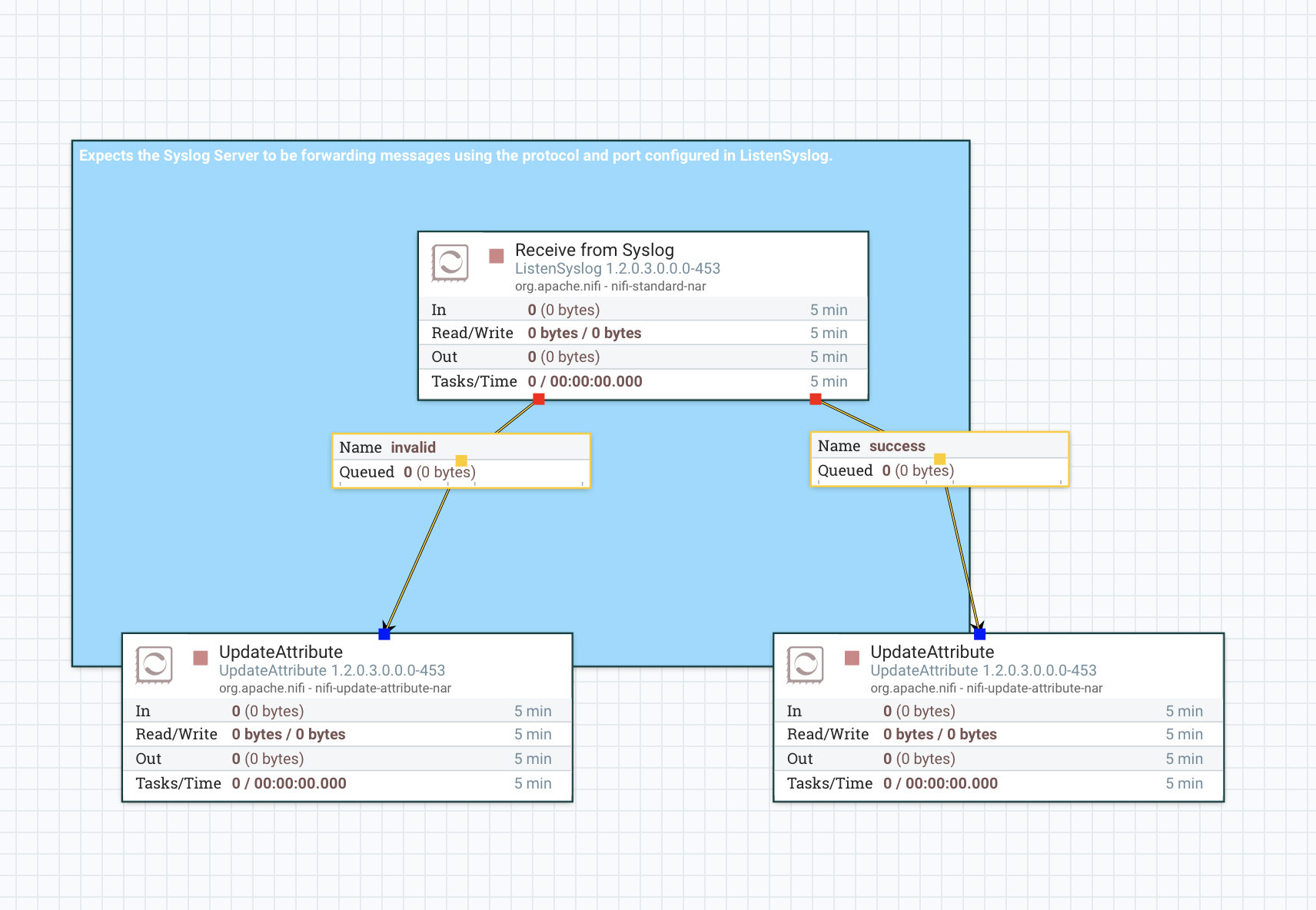

- Data Center Syslogs: You can configure rsyslog to forward your syslogs to a NiFi syslog listener. For example:

Point rsyslog at the NiFi node's ip:port address, and configure your syslog listener to listen on the port where you will receive the packets.
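Before touching the rsyslog configuration, it can help to check that the listener is reachable. The following Python sketch emulates what a syslog forwarder sends: one BSD-style (RFC 3164) line over UDP. The function names, the default tag, and the facility/severity defaults are assumptions for illustration, and UDP is assumed as the transport; the listener may be configured for TCP instead.

```python
import socket
from datetime import datetime


def format_syslog(message, hostname, tag="app", facility=1, severity=6, now=None):
    """Format a BSD-style (RFC 3164) syslog line."""
    now = now or datetime.now()
    # The PRI value is facility * 8 + severity (1 = user, 6 = informational).
    priority = facility * 8 + severity
    # RFC 3164 timestamps look like "Jan  5 14:03:07" (day padded with a space).
    timestamp = f"{now:%b} {now.day:2d} {now:%H:%M:%S}"
    return f"<{priority}>{timestamp} {hostname} {tag}: {message}"


def send_syslog(line, host, port):
    """Send one syslog line over UDP to the given listener address."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (host, port))
```

Calling `send_syslog(format_syslog("disk almost full", "web01"), nifi_host, nifi_port)` with your own listener address should make one message show up in the flow.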

- DHCP Logs: You can build a very low-latency agent with Apache MiNiFi that tails the DHCP logs and sends them to a Kafka topic. Your NiFi cluster then reads the topic and writes the data to an HBase database.

The same approach works for set-top boxes: you get a log from the S.T.B. and then send it to your Kafka topic.
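The "tail and forward" step can be pictured with a few lines of Python. This is not MiNiFi code, only a sketch of the idea under simple assumptions: the agent remembers a byte offset into the log file, reads whatever complete lines were appended since the last poll, and hands each one to a `publish` callable. Here `publish` is a hypothetical stand-in for a Kafka producer's send method, and log rotation is ignored.

```python
def read_new_lines(path, offset):
    """Return the complete lines appended since `offset`, plus the new offset."""
    with open(path, "rb") as log:
        log.seek(offset)
        chunk = log.read()
    # Stop at the last newline so a half-written line waits for the next poll.
    end = chunk.rfind(b"\n") + 1
    lines = chunk[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end


def forward(path, offset, publish):
    """Publish every new line and return the offset to resume from."""
    lines, new_offset = read_new_lines(path, offset)
    for line in lines:
        publish(line)  # stand-in for sending the line to a Kafka topic
    return new_offset
```

Calling `forward` in a loop with a short sleep, and storing the returned offset between calls, gives the basic tailing behaviour. Persisting that offset is what lets an agent resume after a restart without resending or skipping lines.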

Summary:

NiFi's uses are not infinite, but they are many, because it is very flexible and, in my opinion, covers the three critical areas:

- Developer teams: Simple to use and learn.

- Operations teams: Simple to operate, monitor, and understand when bugs appear.

- Architecture teams: Simple to apply to many use cases in solution architecture.

You will get many benefits from NiFi:

- Simplicity for developers.

- Time to market.

- Security.

- Flexibility.

- Easy to understand.

- Scalable.

- Powerful data movement.

- The possibility to contract support from Hortonworks if you need level 3 | 4 support.

- And the open source community working to improve functionality and fix bugs.

Thanks

Kind regards,

Contact:

- Linkedin: https://www.linkedin.com/in/martingatto/

- Twitter: https://twitter.com/gattom83
